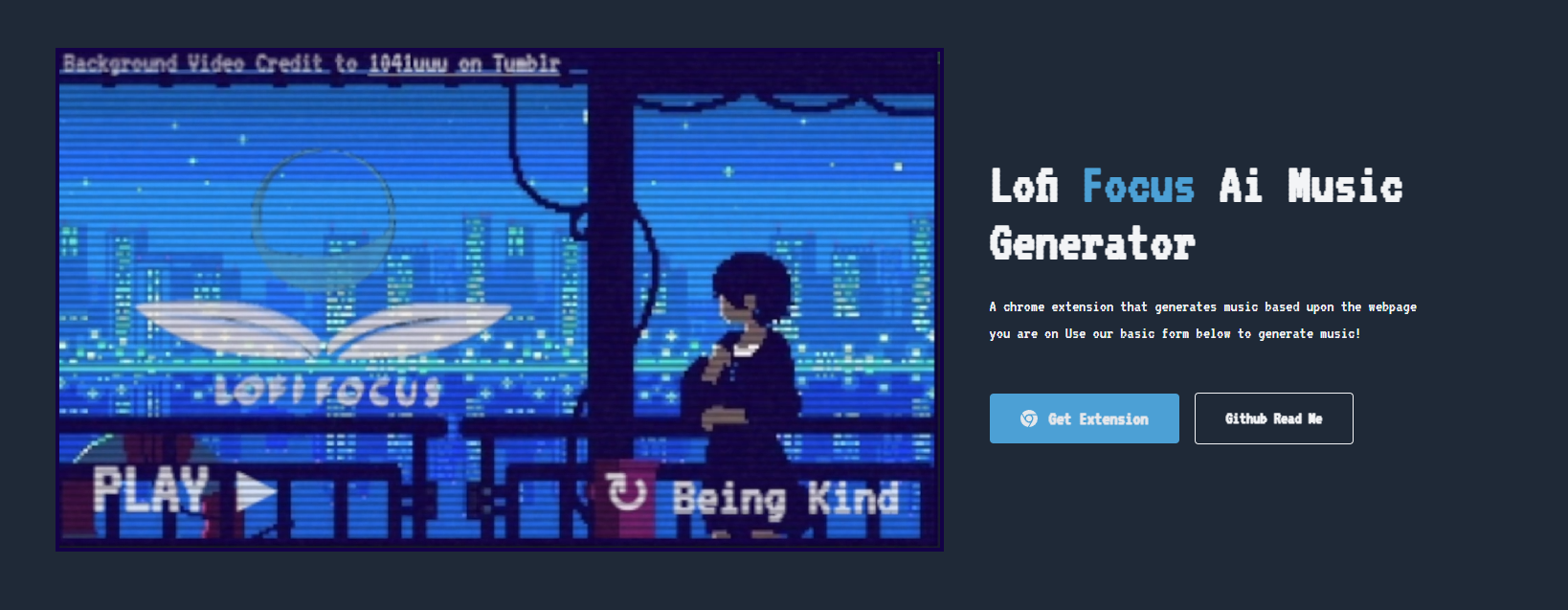

I’m excited to share how my team recently won 1st place at the AudioCraft 24-hour hackathon! Our winning project involved training a custom MusicGen model for generating lofi hip hop music. In this post, I’ll walk through how we created the training data, fine-tuned the model, and built a Flask API to serve our custom lofi MusicGen model using AudioCraft.

Our hackathon project: https://webenclave.com/2023/09/11/claiming-victory-at-the-audiocraft-24-hour-hackathon/

Creating the Training Data

The first step was gathering a dataset of high-quality lofi .wav files with matching text prompts. I built a helper/utility repo, https://github.com/BChip/Audiocraft-MusicGen-DataModelPrep, to simplify this process; see its README.md for how to use the scripts.

We sourced Creative Commons lofi tracks ourselves and split them into 30-second clips using the split_audio.py script.
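The split_audio.py script itself isn't reproduced here. As a rough sketch of the same idea, the following uses only Python's standard-library `wave` module and assumes uncompressed PCM .wav input. The function name `split_wav`, the `<name>_000.wav` naming scheme, and dropping a trailing piece shorter than 30 seconds are illustrative choices, not necessarily what split_audio.py does.

```python
import os
import wave


def split_wav(src_path, out_dir, clip_seconds=30, keep_remainder=False):
    """Hypothetical helper (a sketch, not the repo's split_audio.py).

    Splits a PCM .wav file into consecutive clips of clip_seconds each and
    returns the list of written clip paths. A trailing piece shorter than
    clip_seconds is dropped unless keep_remainder is True.
    """
    os.makedirs(out_dir, exist_ok=True)
    base = os.path.splitext(os.path.basename(src_path))[0]
    written = []
    with wave.open(src_path, "rb") as src:
        params = src.getparams()
        frames_per_clip = int(clip_seconds * src.getframerate())
        index = 0
        while True:
            frames = src.readframes(frames_per_clip)
            # readframes returns raw bytes; convert to a frame count
            n_frames = len(frames) // (params.sampwidth * params.nchannels)
            if n_frames == 0:
                break
            if n_frames < frames_per_clip and not keep_remainder:
                break
            out_path = os.path.join(out_dir, f"{base}_{index:03d}.wav")
            with wave.open(out_path, "wb") as dst:
                # Keep the source format; the frame count is patched on close
                dst.setnchannels(params.nchannels)
                dst.setsampwidth(params.sampwidth)
                dst.setframerate(params.framerate)
                dst.writeframes(frames)
            written.append(out_path)
            index += 1
    return written
```

Dropping the short tail keeps every training clip at the same length; pass `keep_remainder=True` if you would rather keep it.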

The helper scripts also help with creating the descriptions. Here are two example descriptions we used:

“slow chill hip hop beat with mellow piano”

or

“upbeat lofi with energetic drums”

Capturing the mood and essence of each clip is key for teaching MusicGen the nuances of lofi hip hop.
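How the descriptions are stored depends on the trainer. My understanding is that musicgen_trainer reads a folder of .wav clips, each paired with a .txt file of the same name holding its description; treat that layout as an assumption and confirm it against the trainer's README. Under that assumption, a hypothetical `write_captions` helper could look like this:

```python
import os


def write_captions(clip_dir, captions):
    """Hypothetical helper: write one .txt description next to each .wav clip.

    Assumes the trainer pairs "clip.wav" with "clip.txt" (check its README).
    captions maps a clip file name (e.g. "track_000.wav") to its text prompt.
    Returns the list of caption files written.
    """
    written = []
    for clip_name, description in captions.items():
        clip_path = os.path.join(clip_dir, clip_name)
        if not os.path.isfile(clip_path):
            raise FileNotFoundError(f"no audio clip for caption: {clip_path}")
        # Collapse newlines and repeated spaces into a single-line prompt
        text = " ".join(description.split())
        if not text:
            raise ValueError(f"empty description for {clip_name}")
        txt_path = os.path.splitext(clip_path)[0] + ".txt"
        with open(txt_path, "w", encoding="utf-8") as f:
            f.write(text)
        written.append(txt_path)
    return written
```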

Fine-Tuning the Model

With our dataset ready, it was time to fine-tune the MusicGen model. The musicgen_trainer script (https://github.com/chavinlo/musicgen_trainer) made this easy.
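Before starting a training run, a quick pre-flight check can catch missing or empty descriptions and over-long clips. This is a hypothetical sketch, not part of musicgen_trainer; it assumes each .wav clip is paired with a same-named .txt description and that clips should be at most 30 seconds long.

```python
import os
import wave


def check_dataset(dataset_dir, max_seconds=30.0):
    """Hypothetical pre-flight check for a folder of paired .wav/.txt files.

    Returns a list of human-readable problems (empty if none were found).
    """
    problems = []
    names = sorted(os.listdir(dataset_dir))
    stems_wav = {os.path.splitext(n)[0] for n in names if n.endswith(".wav")}
    stems_txt = {os.path.splitext(n)[0] for n in names if n.endswith(".txt")}
    # Unpaired files on either side
    for stem in sorted(stems_wav - stems_txt):
        problems.append(f"{stem}.wav has no matching .txt description")
    for stem in sorted(stems_txt - stems_wav):
        problems.append(f"{stem}.txt has no matching .wav clip")
    # Paired files: description must be non-empty, clip must not be too long
    for stem in sorted(stems_wav & stems_txt):
        with open(os.path.join(dataset_dir, stem + ".txt"), encoding="utf-8") as f:
            if not f.read().strip():
                problems.append(f"{stem}.txt is empty")
        with wave.open(os.path.join(dataset_dir, stem + ".wav"), "rb") as w:
            seconds = w.getnframes() / w.getframerate()
        if seconds > max_seconds:
            problems.append(f"{stem}.wav is {seconds:.1f}s, longer than {max_seconds:.0f}s")
    return problems
```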

The result was a MusicGen model specialized in generating lofi tunes based on text prompts!

Serving the Custom Model with Flask

To showcase our custom lofi MusicGen model, we built an HTTP API using AudioCraft and Flask.

The code handles:

- Validating the API parameters

- Loading the custom model

- Generating lofi music conditioned on the text prompt

- Returning the generated .wav file

This lets us generate adaptive lofi tracks on the fly, based on what the user is currently browsing.
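The app.py linked below is the real implementation. To illustrate the parameter-validation step, here is a sketch with hypothetical parameter names (`prompt`, `duration`) and made-up limits; the actual API's parameters and limits may differ.

```python
# Hypothetical limits for illustration; not taken from the project's app.py
MAX_PROMPT_CHARS = 200
MIN_DURATION_S = 1.0
MAX_DURATION_S = 30.0
DEFAULT_DURATION_S = 10.0


def validate_params(args):
    """Validate raw request parameters (a mapping of strings).

    Returns {"prompt": str, "duration": float}, or raises ValueError with a
    message suitable for an HTTP 400 response.
    """
    prompt = (args.get("prompt") or "").strip()
    if not prompt:
        raise ValueError("'prompt' is required")
    if len(prompt) > MAX_PROMPT_CHARS:
        raise ValueError(f"'prompt' must be at most {MAX_PROMPT_CHARS} characters")

    raw_duration = args.get("duration", DEFAULT_DURATION_S)
    try:
        duration = float(raw_duration)
    except (TypeError, ValueError):
        raise ValueError("'duration' must be a number") from None
    # The chained comparison is False for NaN, so "nan" is rejected too
    if not MIN_DURATION_S <= duration <= MAX_DURATION_S:
        raise ValueError(
            f"'duration' must be between {MIN_DURATION_S:g} and {MAX_DURATION_S:g} seconds"
        )
    return {"prompt": prompt, "duration": duration}
```

In a Flask view you could call `validate_params(request.args)` and turn a `ValueError` into an HTTP 400 response. The cleaned duration would then be passed to AudioCraft with `model.set_generation_params(duration=...)` before calling `model.generate([prompt])`.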

Here is the code for the Flask + AudioCraft API:

https://github.com/KBVE/lofifocus-docker/blob/main/musicgen/app.py
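For returning the generated .wav file: AudioCraft's `MusicGen.generate` returns a float tensor shaped `[batch, channels, samples]`, and the model exposes its rate as `model.sample_rate`. One way to send that back without touching disk is to encode it in memory. The helper below is a hypothetical, standard-library-only sketch that assumes mono float samples in [-1.0, 1.0]; app.py may handle this differently (for example with AudioCraft's own `audio_write` utility).

```python
import io
import struct
import wave


def float_to_wav_bytes(samples, sample_rate):
    """Hypothetical helper: encode mono float samples as 16-bit PCM WAV bytes."""
    # Clip to [-1.0, 1.0] and scale to signed 16-bit integers
    ints = [int(round(max(-1.0, min(1.0, float(s))) * 32767)) for s in samples]
    pcm = struct.pack(f"<{len(ints)}h", *ints)  # WAV PCM data is little-endian
    buf = io.BytesIO()
    with wave.open(buf, "wb") as w:
        w.setnchannels(1)
        w.setsampwidth(2)  # 2 bytes = 16-bit samples
        w.setframerate(sample_rate)
        w.writeframes(pcm)
    # wave does not close a file object it was handed, so buf is still readable
    return buf.getvalue()
```

With a mono model, a Flask handler might call `float_to_wav_bytes(wav[0, 0].cpu().tolist(), model.sample_rate)` and return the result as `Response(data, mimetype="audio/wav")`.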

Results

Our specialized model creates original lofi hip hop music that reflects the mood described in human-written text prompts. Wrapping the model in a REST API built on AudioCraft and Flask made it straightforward to deploy.

Check out the original project on lablab.ai: https://lablab.ai/event/audiocraft-24-hours-hackathon/kbve/lofi-focus

Fine-tuning AI models like MusicGen for specific audio domains unlocks exciting new possibilities. I had a blast working on this project and look forward to more audio AI innovations ahead.

Let me know if you have any questions! I'm happy to share more details about our winning strategy and code.